Ask us how we can help you succeed.

By Alan Lehane, Developer

Category: Expertise strategy

In this blog, I give a simple overview of decision trees: how they work and how they are created.

So far, the Machine Learning Blog Series has discussed the Logistic Regression and Linear Regression Machine Learning algorithms.

Both of these algorithms are linear. In this article, I introduce an example of a non-linear algorithm: decision trees.

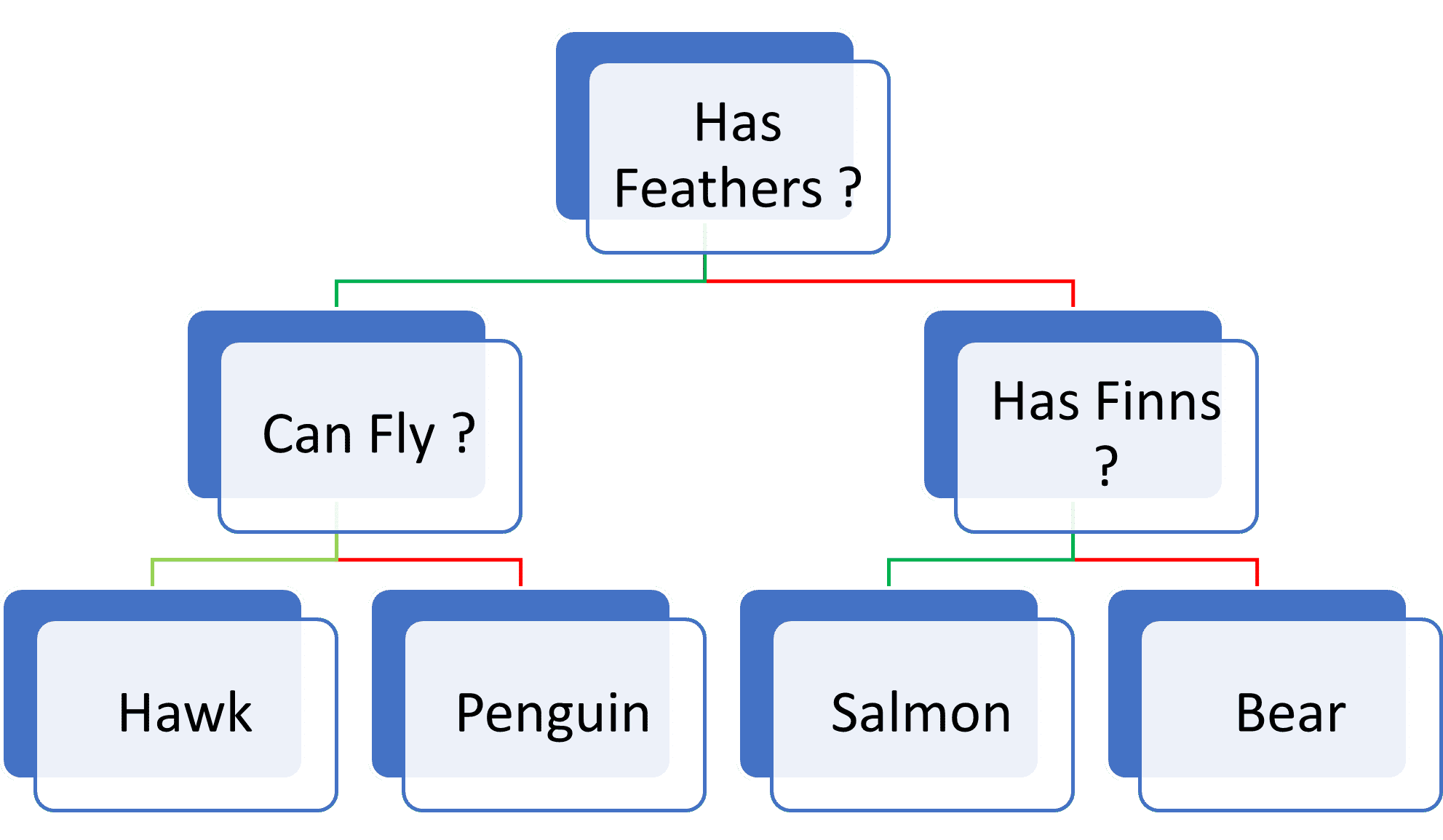

Above is an example of a simple decision tree. Each internal (non-leaf) node, starting from the root, represents an input variable (x) and a split on that variable.

Each leaf represents a value of the output variable (y), which is used to make a prediction.

The tree above takes an example animal as its input. At each node, a branch is selected depending on the features of the example animal, and this process is repeated until a leaf is reached.
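
The traversal described above can be sketched in Python. This is a minimal illustration, not the tree in the figure: the features (`has_feathers`, `can_fly`, `lives_in_water`), the animal labels and the `Leaf`/`Node` classes are hypothetical, and each node is assumed to ask a simple yes/no question.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: str  # value of the output variable (y) returned at this leaf


@dataclass
class Node:
    feature: str  # input variable (x) tested at this node
    yes: "Tree"   # branch followed when the feature is present
    no: "Tree"    # branch followed otherwise


Tree = Union[Leaf, Node]


def predict(tree: Tree, example: dict) -> str:
    """Select a branch at each node until a leaf is reached, then return its prediction."""
    while isinstance(tree, Node):
        tree = tree.yes if example[tree.feature] else tree.no
    return tree.prediction


# Hypothetical animal tree: features and labels are illustrative only.
animal_tree = Node(
    feature="has_feathers",
    yes=Node(feature="can_fly", yes=Leaf("eagle"), no=Leaf("penguin")),
    no=Node(feature="lives_in_water", yes=Leaf("fish"), no=Leaf("dog")),
)

print(predict(animal_tree, {"has_feathers": True, "can_fly": False, "lives_in_water": True}))
# prints "penguin"
```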

A tree can be derived from training data by using recursive binary splitting. Different candidate splits are tried and evaluated using a cost function. All input variables and all possible split points are evaluated in a greedy manner: at each step, the split with the lowest cost is chosen.
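
A minimal sketch of greedy recursive binary splitting for a regression tree follows, assuming numeric input variables and a numeric output. The helper names (`sse`, `best_split`, `build_tree`) and the stopping parameters `min_size` and `max_depth` are hypothetical. Every variable and every observed value is tried as a split point, and the split with the lowest total squared error is kept; because each leaf predicts the mean output of its examples, minimising this total is the same as minimising the mean squared error over the node's examples.

```python
from statistics import mean


def sse(ys):
    """Sum of squared errors when every output in the group is predicted by the group mean."""
    if not ys:
        return 0.0
    m = mean(ys)
    return sum((v - m) ** 2 for v in ys)


def best_split(X, y):
    """Greedy search over every input variable and every observed value as a split point."""
    best = None  # (cost, variable index, threshold)
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, y) if row[j] < threshold]
            right = [t for row, t in zip(X, y) if row[j] >= threshold]
            if not left or not right:
                continue  # a split must send at least one example each way
            cost = sse(left) + sse(right)
            if best is None or cost < best[0]:
                best = (cost, j, threshold)
    return best


def build_tree(X, y, min_size=2, max_depth=3, depth=0):
    """Recursive binary splitting: choose the best split, then repeat on each side."""
    if len(y) < min_size or depth >= max_depth or sse(y) == 0:
        return mean(y)  # leaf: predict the mean output of its training examples
    split = best_split(X, y)
    if split is None:
        return mean(y)  # no valid split (all input values identical)
    _, j, threshold = split
    left = [(row, t) for row, t in zip(X, y) if row[j] < threshold]
    right = [(row, t) for row, t in zip(X, y) if row[j] >= threshold]
    return {
        "feature": j,
        "threshold": threshold,
        "left": build_tree([r for r, _ in left], [t for _, t in left], min_size, max_depth, depth + 1),
        "right": build_tree([r for r, _ in right], [t for _, t in right], min_size, max_depth, depth + 1),
    }


X = [[1], [2], [3], [10], [11], [12]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
print(build_tree(X, y))
# prints {'feature': 0, 'threshold': 10, 'left': 1.0, 'right': 5.0}
```

Leaves here are plain numbers and internal nodes are dictionaries; this is only one convenient representation for a sketch.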

The most common cost function for regression trees is the mean squared error. For example, a tree is created with random splits, a supervised training set is passed into the tree, and the output (y) is returned for each example.

The squared error is then calculated for each member of the training set:

(training set output – y)²
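
Written out, with $t_i$ standing for the training set output of the $i$-th of $n$ examples and $y_i$ for the output the tree returns for it (notation introduced here for illustration only), the per-example squared error and its average are:

$$
e_i = (t_i - y_i)^2, \qquad \text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (t_i - y_i)^2
$$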

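The average squared error across the entire training set is then calculated. Another random tree is created and the process repeats. After n trees have been created, the best tree (the tree with the smallest average squared error) is returned. The greedy recursive split described above uses the same measure, but applies it to each candidate split rather than to whole randomly generated trees.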
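
The random-tree procedure above can be sketched as follows. To keep it short, each "random tree" here is a hypothetical one-split tree (a stump) with a randomly chosen variable and threshold, whose two leaves predict the mean output of the training examples on each side; trees are represented as plain Python functions. `mean_squared_error`, `random_stump` and `best_of_n` are illustrative names, not a library API.

```python
import random
from statistics import mean


def mean_squared_error(tree, X, y):
    """Average of (training set output - tree output)^2 over the training set."""
    return mean((target - tree(x)) ** 2 for x, target in zip(X, y))


def random_stump(X, y, rng):
    """Hypothetical 'random tree': a single split on a random variable at a random threshold."""
    j = rng.randrange(len(X[0]))
    t = rng.choice([row[j] for row in X])
    # Each leaf predicts the mean output of the training examples on its side
    # (falling back to all outputs if a side happens to be empty).
    left = [target for row, target in zip(X, y) if row[j] < t] or y
    right = [target for row, target in zip(X, y) if row[j] >= t] or y
    left_value, right_value = mean(left), mean(right)
    return lambda x: left_value if x[j] < t else right_value


def best_of_n(X, y, n=20, seed=0):
    """Create n random trees and return the one with the smallest mean squared error."""
    rng = random.Random(seed)
    candidates = [random_stump(X, y, rng) for _ in range(n)]
    return min(candidates, key=lambda tree: mean_squared_error(tree, X, y))


X = [[1], [2], [3], [10], [11], [12]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
best = best_of_n(X, y, n=50)
print(mean_squared_error(best, X, y))
```

Because the search is random, the result depends on `n` and `seed`; the greedy split search avoids this by evaluating every candidate split directly.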

Pruning a decision tree means removing leaf nodes to improve the readability of the tree and its performance on data it was not trained on.

The quickest and simplest way to prune a tree is to work through each node and determine the effect on the tree of removing it. For example, remove a node and rerun the training set (or, preferably, a separate hold-out set) through the tree: if the error has decreased or stayed the same, leave the node removed; if the error has increased, keep the node.
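
The node-by-node pruning described above can be sketched as below, assuming a regression tree stored as nested dictionaries whose leaves are numbers. The `value` key on each internal node (the prediction to use if the node is collapsed into a leaf, for example the mean training output that reached it) is a hypothetical convention, as are the function names. The tree is pruned bottom-up, and a node is collapsed whenever doing so does not increase the squared error.

```python
def predict(node, x):
    """Walk from the root to a leaf; internal nodes are dicts, leaves are numbers."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] < node["threshold"] else node["right"]
    return node


def prune(node, X, y):
    """Bottom-up pruning: prune both children first, then try collapsing this node.

    X, y are the examples that reach `node`. The tree is modified in place and the
    (possibly collapsed) subtree is returned.
    """
    if not isinstance(node, dict):
        return node  # already a leaf
    goes_left = [x[node["feature"]] < node["threshold"] for x in X]
    left = [(x, t) for x, t, g in zip(X, y, goes_left) if g]
    right = [(x, t) for x, t, g in zip(X, y, goes_left) if not g]
    node["left"] = prune(node["left"], [x for x, _ in left], [t for _, t in left])
    node["right"] = prune(node["right"], [x for x, _ in right], [t for _, t in right])
    if not y:
        return node  # no examples reach this node, so keep it unchanged
    error_with_node = sum((t - predict(node, x)) ** 2 for x, t in zip(X, y))
    error_as_leaf = sum((t - node["value"]) ** 2 for t in y)
    # Remove the node if the error is reduced or unchanged; keep it if the error increases.
    return node["value"] if error_as_leaf <= error_with_node else node


# Hypothetical example: the inner split adds nothing, so it is collapsed.
tree = {
    "feature": 0, "threshold": 10, "value": 3.0,
    "left": {"feature": 0, "threshold": 2, "value": 1.0, "left": 1.0, "right": 1.0},
    "right": 5.0,
}
X = [[1], [2], [3], [10], [11], [12]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
print(prune(tree, X, y))
# prints {'feature': 0, 'threshold': 10, 'value': 3.0, 'left': 1.0, 'right': 5.0}
```

Collapsing a node only changes the predictions for the examples that reach it, so comparing the error on that subset gives the same decision as rerunning the whole data set through the tree.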

This blog continues the Technical Machine Learning Series and introduces the idea of non-linear Machine Learning algorithms. In it, I gave a simple overview of decision trees: how they work and how they are created.

Alan Lehane, Software Developer

Alan has worked with Aspira/emagine for several years as a Software Developer, specialising in Data Analytics and Machine Learning. He has provided various services to Aspira's clients, including Software Development, Test Automation, Data Analysis and Machine Learning.
